The Best Way for Getting the Wedding Reviews Malaysia

Wedding reviews in Malaysia play an important role: they are a source for the information you need about wedding ceremonies today. Even if you have planned a wedding before, wedding reviews in Malaysia help keep your ideas about weddings up to date. Based on […]

November 11, 2015 / Sophie

Planning the Perfect Wedding in Johor Bahru and Some Great Facilities there

A wedding in Johor Bahru needs different preparation than a wedding party held elsewhere in Malaysia, because so many facilities can be found there. It would be a shame to settle for a simple plan, since the facilities can be used for […]

November 6, 2015 / Sophie

Wedding Jewellery Malaysia and Some Important Aspect to be considered

Many options for wedding jewellery in Malaysia are easy to find on the market today. You need not worry that finding the right style of jewellery will be hard. It is now easy to match the jewellery to the wedding theme. When the match between […]

October 29, 2015 / Sophie

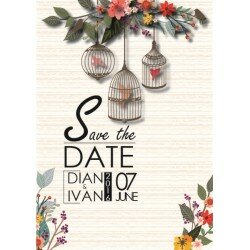

Wedding Stationery Malaysia Tips

Before you hold a wedding, one of the most important preparations is the wedding stationery, which can include wedding invitations and wedding cards. Both have the same purpose: to invite people and attract them to come to your wedding. That is why wedding invitations and wedding cards should be […]

October 22, 2015 / Sophie

The Latest Wedding Hairstyle Trends

Marriage is sacred and special, and it is certainly the dream of every couple who is ready to get married. At the wedding, the whole extended family and the invited guests are present to witness the appearance of the bridal couple. Therefore, as a bride, you should pay attention to your appearance from head to toe. This […]

October 12, 2015 / Sophie

The Introduction of Top 10 Wedding Photographers

A wedding is a special event in people's lives, so they commonly want something that preserves how the day looked. It is therefore important to prepare a photographic record of your romantic moments with your sweetheart. We have collected the top 10 wedding photographers, who can inspire you to have a sensational wedding documentary. […]

September 23, 2015 / Sophie

Wedding Dress Malaysia Can You Find In Easy and Still in Suitable Design

Holding a wedding party means you must prepare everything well. One of the most important things to choose carefully is your wedding dress. If you want a wedding party with a Malay concept, your wedding dress must follow the Malay concept too. Wedding dress […]

September 16, 2015 / Sophie

Considering the Kinds Wedding Package Malaysia for the Best Moment

A wedding is a sacred ceremony. It marks a new phase of life that means a great deal to the couple, which is why people are always busy preparing for the wedding. There are some important matters to consider about the wedding. Well, it is the […]

September 11, 2015 / Sophie

White Wedding Theme Is Best Wedding Theme That You Must Choose

The wedding theme can be the most important thing to decide on. There are several different wedding themes you can choose from, and the right theme will make your wedding party look its best. One highly recommended theme is the white wedding theme. This wedding theme will really offer […]

September 7, 2015 / Sophie

Chinese Wedding Malaysia to Know

Malaysia has a unique culture, especially when it comes to Chinese weddings in Malaysia. The blend of Chinese and Malay culture shapes the wedding celebration. Furthermore, there are some interesting traditions to pay attention to so that the celebration will be complete and perfect. For your information and to broaden your knowledge, we complete this site […]

September 7, 2015 / Sophie

Copyright © 2021 Pre Wedding Guide